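- Write throughput: 106 MB/s (customer app) vs
  - 657 MB/s (customer database), about 6.2 times the app's throughput (619%)
  - 433 MB/s (Claremont NFS), about 4.1 times the app's throughput (408%)
- Read throughput: 34 MB/s (customer app) vs
  - 2662 MB/s (customer database), about 78.3 times the app's throughput (7830%)
  - 434 MB/s (Claremont NFS), about 12.8 times the app's throughput (1276%)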
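The percentages quoted above are ratios between two throughput figures. As a minimal sketch (the dictionary and function names are illustrative, not part of the original test tooling), the following reproduces those ratios from the headline numbers and also expresses the same gap as the share of throughput the customer app was missing:

```python
# Headline throughput figures from the tests above, in MB/s.
WRITE = {"customer app": 106, "customer database": 657, "Claremont NFS": 433}
READ = {"customer app": 34, "customer database": 2662, "Claremont NFS": 434}


def compare(results, baseline="customer app"):
    """Return {name: (ratio, pct_lower)} for each system against the baseline.

    ratio     -- reference throughput divided by baseline throughput
    pct_lower -- how far the baseline falls short of the reference, in percent
    """
    base = results[baseline]
    out = {}
    for name, mbps in results.items():
        if name == baseline:
            continue
        ratio = mbps / base
        pct_lower = (1 - base / mbps) * 100
        out[name] = (ratio, pct_lower)
    return out


for label, results in (("write", WRITE), ("read", READ)):
    for name, (ratio, pct_lower) in compare(results).items():
        print(f"{label}: {name} is {ratio:.1f}x the customer app "
              f"(app throughput is {pct_lower:.0f}% lower)")
```

Reporting both numbers avoids ambiguity: a ratio can exceed 100%, whereas "percent lower" is bounded by 100%.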

These headline figures were backed up by a drill-down into the individual tests, all of which showed a huge drop in write and (especially) read performance on the customer’s application servers with NFS-attached storage. We saw no such drop on our equivalent servers in the Claremont Cloud.

We had now isolated the problem and quantified its impact by comparing actual against expected performance.

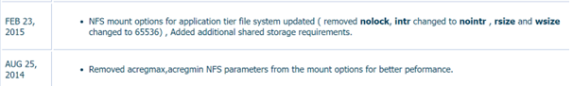

NFS Mount Point options

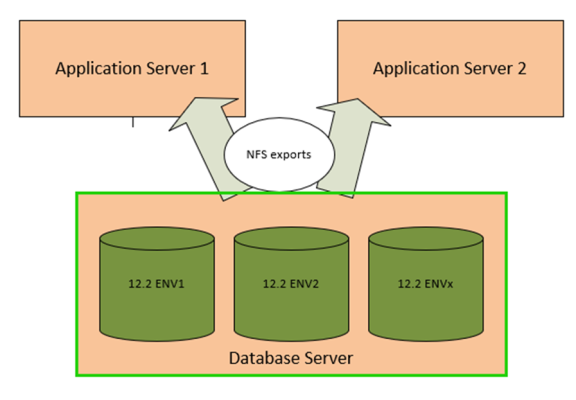

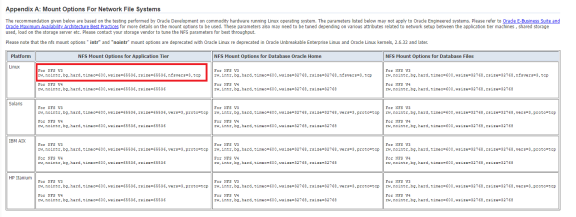

The next step of the investigation was to determine why the customer’s system experienced such a significant drop-off in performance on NFS attached storage, compared to the equivalent system in the Claremont Cloud which did not.

One difference was obvious straight away: the mount point options used on the application servers.

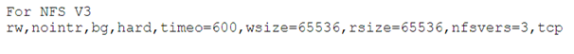

On the Claremont server, we were using NFS mount options:

rw,nointr,bg,hard,timeo=600,wsize=65536,rsize=65536 0 0

But the customer was using:

rw,hard,intr,bg,timeo=600,rsize=32768,wsize=32768,nfsvers=3,tcp,nolock,acregmin=0,acregmax=0

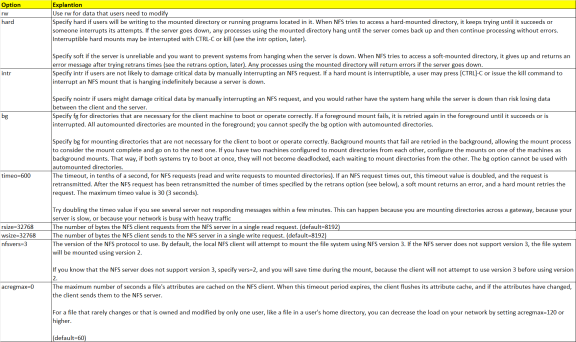
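To make the differences between the two option strings easier to see, here is a small sketch that parses both and reports what differs. The helper names are hypothetical, and the trailing `0 0` on the Claremont line (the fstab dump and pass fields) is left out because it is not a mount option.

```python
def parse_options(option_string):
    """Split a comma-separated mount option string into a {name: value} dict.

    Flags without a value (for example "hard") map to True.
    """
    options = {}
    for item in option_string.split(","):
        name, sep, value = item.strip().partition("=")
        options[name] = value if sep else True
    return options


def diff_options(first, second):
    """Return (only_in_first, only_in_second, changed) for two option strings."""
    a, b = parse_options(first), parse_options(second)
    only_a = {k: v for k, v in a.items() if k not in b}
    only_b = {k: v for k, v in b.items() if k not in a}
    changed = {k: (a[k], b[k]) for k in a.keys() & b.keys() if a[k] != b[k]}
    return only_a, only_b, changed


# Option strings copied from the two servers described above.
CLAREMONT = "rw,nointr,bg,hard,timeo=600,wsize=65536,rsize=65536"
CUSTOMER = ("rw,hard,intr,bg,timeo=600,rsize=32768,wsize=32768,"
            "nfsvers=3,tcp,nolock,acregmin=0,acregmax=0")

only_claremont, only_customer, changed = diff_options(CLAREMONT, CUSTOMER)
print("Only on Claremont:", sorted(only_claremont))
print("Only on customer: ", sorted(only_customer))
print("Different values: ", dict(sorted(changed.items())))
```

The comparison ignores option order, so only genuine differences are reported: the read and write sizes, the `intr`/`nointr` flag, and the extra options set on the customer side.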

What do all these mean?