Oracle Managed Services

Our team of specialists provides clients with cost-effective, first-class Oracle functional, technical, database and infrastructure managed services.

Oracle Consulting Services

We deliver a wide range of Oracle E-Business engagements including health checks, implementations, R12.2 upgrades and optimisation activities that drive your business.

Why Claremont?

Claremont is an award-winning Oracle Managed Services Provider. Our team of experts help customers get the most out of their investment in Oracle technology, deliver unrivalled customer service and have a proven track record of delivery.

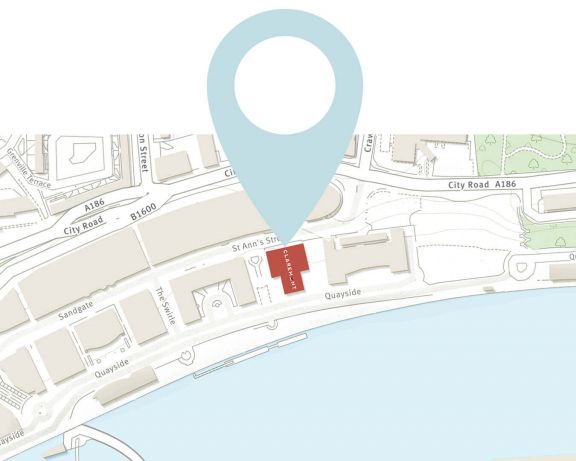

Claremont at a glance

Claremont is the leading independent Oracle Managed Services provider in the UK. Our award-winning team is driven by delivering the highest levels of service to our customers, working collaboratively with them to maximise business benefits through efficient and effective use of Oracle technology.

Oracle EBS

Claremont has a strong focus on providing Oracle E-Business services. Our knowledge and skills leave us uniquely placed to help organisations get the best value from their Oracle investment.

Oracle Cloud Applications

As one of the first organisations in the UK to implement and support Oracle Cloud Applications, Claremont has the credentials to help you get the most from your investment in the product.

Realise your investment in Oracle’s technology

Over the years, Claremont has worked with many customers in the private sector across many industry verticals, including: Financial Services, Food & Beverage, Higher Education, High Tech, Professional Services, Retail, Telecommunications, Travel & Transport and Manufacturing.

First-class services at affordable prices

Claremont works with key public sector organisations, helping them get the most out of their Oracle investment. We also embed data protection into our services and are ISO 27001 certified, for your peace of mind on information security. Claremont is a Crown Commercial Service supplier, so all of our services can be procured through G-Cloud 13.

Finance & Procurement

Drive finance and procurement process efficiency to improve business outcomes and ensure compliance with international reporting standards through use of Oracle Financials and Procurement.

Human Resources

Unleash the potential of your workforce by streamlining and optimising your HR processes, drawing on our extensive operational experience in Oracle HCM and Payroll.

5 things to talk to us about at Oracle Applications Unlimited Day

Oracle Applications Unlimited Day is taking place on Tuesday, 14th November, and we're thrilled to…

5 things we learnt at Oracle5: Live

Explore the key insights gained from Oracle5: Live in this blog. Discover the latest trends,…

Claremont Wins Gold!

Claremont, the premier Oracle E-Business Suite solutions provider in the UK, has received tremendous…

Oracle Error Correction Support for Oracle EBS R12.2

Oracle announces that from July 2024, the Error Correction Support Baseline (ECS) for Oracle EBS…

Happy Clients

Our clients have peace of mind because they enjoy first-class support from the leading provider of Oracle Managed Services. Here are just a few of the comments we’ve recently received from our customers.

Superdrug

"Our decision to choose Claremont as our Oracle partner has been a good move: Their Oracle E-Business managed service provision is excellent, which has helped transform the service delivered to all European Finance teams. They completed an Oracle E-Business R12.2 upgrade on time, to budget, and with no issues, delivering a super smooth transition".

IT Director

Angel Trains

"A breath of fresh air and a real pleasure to work with Claremont over the last 4 years. What really made the difference was the professional, helpful and friendly people involved, a real differentiator."

Head of IT

Go Ahead

"I can honestly say that using Claremont was the best money I have ever spent. Claremont promised and Claremont delivered."

Group Head of Enterprise Systems

National Trust

"Selecting Claremont has turned out to be one of the best procurement decisions we have made in recent times: They employ good people and have been completely committed to our mutual success."

Chief Information Officer

Superdrug

"Very happy with the way things are going. We are getting good quality answers in reasonable amounts of time. All very good."

Group Head of IT

Sony

"Our work with Claremont has given us the insight and support that our business needs. First rate consultants, who are extremely responsive and who provide real business solutions."

Head of IT Operations

Stagecoach

"Claremont is a trusted supplier to Stagecoach, deliver excellent support and provide a proactive service."

Managing Director

Suffolk County Council

"Just wanted to say a huge thank you on behalf of all of us here to you and the team at Claremont for all the support you've given us this year."

IT Business Systems & Device Manager
